A Digital Marketer’s Step By Step Guide to A/B Testing


A/B testing is one of the first buzzwords that digital marketers learn in Advertising 101. But no matter how long you’ve been in the industry, it might be time to go back to basics to ensure your A/B testing is in tip-top shape. At Adlucent, we combine human ingenuity and automation to perform successful A/B tests that increase ROI for our clients. Our company mantra, “Better Every Day,” permeates our culture and our client partnerships as we continuously commit to outperformance. By the end of this article, you’ll have the foundation you need to get started on your next A/B test, so let’s dive right in.

WHAT IS A/B TESTING?

A/B testing is a versatile way to test new ideas and their efficacy. Unlike trial-and-error, it’s a scientific method to see whether an implemented optimization is truly effective or if performance shifts are due to chance. We do this by splitting traffic (typically 50/50) between the original variation and a test variation, then checking whether the difference in results is statistically significant.

GETTING STARTED WITH A/B TESTING

How to Make a Strong A/B Test Question

Before running your test, define what KPIs (key performance indicators) are most important. Choose one key metric to focus on. Otherwise, you won’t know how to define success. It is not enough to ask, “will changing this ad variation improve performance?” Instead, think granularly.

- Will this creative ad variation increase clicks and CTR (click-through rate)?

- What if your test has a higher CTR but lower CVR (conversion rate)?

- What if your test has more orders but a lower AOV (average order value)?

How Do I Split Traffic?

Once you’ve established KPIs and defined your test question, how do you get two random samples to split your original and test variations? One way is to use an online tool, such as Google Ads, which can randomly split traffic for you. You can also do it yourself – one method we use at Adlucent is creating a geo-split that results in two groups of locations with historically similar performance. Then, we can run the original variation in one split and the test variation in the other.

You’ll also want to consider what percentage of traffic should drive to the original variation vs. the test variation. The basic setup is 50/50 – 50% of traffic to one variation and 50% to the other. But you may want a different split, such as 75% on the original and 25% on the test. You may feel more comfortable dedicating only 25% to a new variation, especially if the test is risky – just note that it will take longer to have statistically significant results.
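To make the geo-split idea concrete, here is a minimal sketch. It assumes you have one historical number per location (conversions here) and uses a simple greedy rule: place the largest locations first, each into whichever group currently has the smaller total. The location names, the numbers, and the `geo_split` helper are all made up for illustration; this is one way to balance groups, not necessarily how any particular team builds them.

```python
from typing import Dict, List, Tuple


def geo_split(history: Dict[str, float]) -> Tuple[List[str], List[str]]:
    """Greedily split locations into two groups with similar historical totals."""
    group_a: List[str] = []
    group_b: List[str] = []
    total_a = 0.0
    total_b = 0.0
    # Place the largest locations first; each goes to the currently smaller group.
    for location, value in sorted(history.items(), key=lambda kv: kv[1], reverse=True):
        if total_a <= total_b:
            group_a.append(location)
            total_a += value
        else:
            group_b.append(location)
            total_b += value
    return group_a, group_b


# Hypothetical historical conversions per location (made-up numbers).
history = {"Austin": 120, "Dallas": 110, "Houston": 90, "Denver": 80, "Phoenix": 60, "Tampa": 40}
group_a, group_b = geo_split(history)
total_a = sum(history[loc] for loc in group_a)
total_b = sum(history[loc] for loc in group_b)
print(group_a, total_a)  # original variation runs here
print(group_b, total_b)  # test variation runs here
```

In practice you would likely balance on more than one metric (for example, clicks and revenue) and sanity-check that the two groups trended together before the test began.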

How Long Should I Run an A/B Test?

In short, 2-8 weeks is the average length of a test, but it depends on the sample size. We strongly recommend a sample size of at least 400 users for each group (400 for variation A and 400 for variation B). Why this number? It is roughly what statistical tables and survey calculators recommend for a very large population at a 95% confidence level with a 5% margin of error.

- In a test where the variation difference is on the SERP (search engine results pages), this would be 400 impressions per group.

- In a test where the variation difference is on the landing page, this would be 400 clicks per group, since only people who click would be impacted by the difference.

- However, the test length will be a holistic decision based on traffic levels and the impact of the test results.
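As a rough illustration of where a number near 400 can come from, and how the traffic split affects test length, here is a small sketch. It assumes the standard survey formula for estimating a proportion in a very large population (95% confidence, 5% margin of error, worst-case proportion of 0.5). The function names and the daily traffic figure of 100 are made up for the example.

```python
import math


def survey_sample_size(margin_of_error: float = 0.05, z: float = 1.96, p: float = 0.5) -> int:
    """Sample size for estimating a proportion in a very large population."""
    return math.ceil(z ** 2 * p * (1 - p) / margin_of_error ** 2)


def days_to_reach(min_per_group: int, daily_units: float, test_share: float) -> int:
    """Days until the smaller of the two groups reaches min_per_group.

    daily_units is impressions per day for a SERP test, or clicks per day
    for a landing page test.
    """
    smaller_share = min(test_share, 1 - test_share)
    return math.ceil(min_per_group / (daily_units * smaller_share))


n = survey_sample_size()
print(n)  # 385, which rounds up to the 400 rule of thumb

# Hypothetical traffic: 100 qualifying clicks per day across both groups.
days_even = days_to_reach(400, daily_units=100, test_share=0.5)
days_uneven = days_to_reach(400, daily_units=100, test_share=0.25)
print(days_even, days_uneven)  # 8 16
```

With the same traffic, a 75/25 split takes twice as long as 50/50 to collect 400 users in the smaller group. Treat these as minimums: detecting a small lift reliably can require far more data.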

How to Interpret A/B Test Results

As mentioned earlier, we want to see our key metrics show significantly better results in the variation than the original – meaning the difference is so unlikely to occur by chance that it was most likely due to the change in the test variation.

But let’s consider another factor besides statistical significance. Suppose we maintain the same or increased traffic levels and see higher CTR – does that mean we immediately switch our ad copy to the new variation? It depends. Even if the difference in CTR is statistically significant, that does not mean it is practically significant. In other words, is the lift in our test metric large enough that it would be worth making the change? If it’s as simple as finding/replacing ad copy, then a slight boost would be worth rolling out. But if the price is steeper, such as heavy technical work to change a landing page or a lower margin from running a promo, we may not want to make a change even if the test returns statistically significant results.
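Here is a minimal sketch of checking both kinds of significance for a CTR test. It assumes a two-proportion z-test, which is a common choice for comparing two rates but not the only one. The click and impression counts are made up, and the 10% rollout threshold is a hypothetical business rule, not a statistical standard.

```python
import math
from typing import Tuple


def two_proportion_z_test(clicks_a: int, n_a: int, clicks_b: int, n_b: int) -> Tuple[float, float]:
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = clicks_a / n_a
    rate_b = clicks_b / n_b
    # Pooled rate under the assumption that both variations perform the same.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Made-up counts: original (A) vs. test (B), 10,000 impressions each.
z, p_value = two_proportion_z_test(clicks_a=500, n_a=10_000, clicks_b=570, n_b=10_000)
relative_lift = (570 / 10_000) / (500 / 10_000) - 1

statistically_significant = p_value < 0.05
practically_significant = relative_lift >= 0.10  # hypothetical rollout threshold
print(round(z, 2), round(p_value, 3), round(relative_lift, 2))
print(statistically_significant, practically_significant)
```

The two checks answer different questions. The p-value says whether the difference is likely real; the lift threshold says whether it is big enough to justify the cost of the change.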

HOW TO SET UP AN A/B TEST IN GOOGLE ADS

This is a great section to reference over and over again when you’re setting up your test. We’ll give a step-by-step guide on using the A/B testing feature in Google Ads, a widespread digital advertising platform. A/B testing is universal, though, and great for other channels – so don’t feel limited!

- Scroll down to the bottom section of the left navigation bar and click “Experiments” then “All Experiments.”

- From here, click the blue plus button to get started.

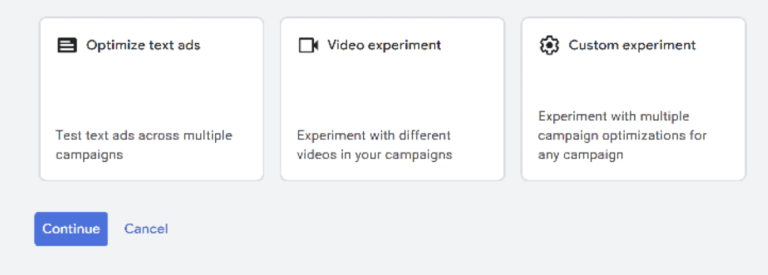

- Once you create a new experiment, there are only three options:

  - Optimize text ads – Test two ad variations by finding/replacing ad copy across one or multiple campaigns. This option is specifically designed for ad copy testing.

  - Video experiment – A/B testing on video/YouTube campaigns on Google Ads.

  - Custom experiment – The most versatile option – make multiple changes of almost any kind, such as landing pages, bidding strategy and budget, targeting, etc.

- Since we’re focusing on Paid Search in this guide, let’s look at the first and third options: Optimize text ads and Custom experiments.

How to Run Split Test Ads in Google

- The “Optimize Text Ads” option in Google Ads experiments is specifically for ad copy testing. First, select which campaign(s) and ad types you want to set aside for testing.

- Second, use Google’s efficient find and replace function to set up your control and experiment ad variations. The control is the original variation, and the experiment is the new text you want to test.

- Finally, give your variation a name and set the experiment dates and traffic split.

- Now you can get started. The Google Ads UI will show you the individual performance of the original and modified ads and an overall look at performance at the top.

Custom Experiments in Google Ads

- If you want to test something other than ad variations in Google Ads, Custom Experiments is the method to choose. First, set a name and description and choose a base campaign for testing – note that you can select only one campaign in Custom Experiments, so we recommend choosing a campaign with significant traffic.

- Now, the experiment will show as a copy of the base campaign, and you can make a change (or multiple changes) in the UI that will apply only to the experiment.

- Then select standard experiment options and schedule.

- Finally, you want to decide whether to sync changes. It’s ideal to leave this on so that you control all factors between the base and experiment campaign and change ONLY the aspect(s) you are testing. For example, bid management should sync between the original and experiment campaigns if you’re testing a landing page.

- Once you set up the test, you will see a panel similar to the one for ad variation testing. Now take a step back and let the data come in.

A/B TESTING: A CASE STUDY

The proof is in the pudding. Recently, our client, UrbanStems, came to us with a question. Will the words “$10 off” perform better than “10% off”? We ran an A/B test in one of our top campaigns using a Custom Experiment in Google Ads – changing the ad copy mentioning “$10 off” to advertise “10% off.”

The results? We saw a 6.13% CTR in the “$10 off” variation vs. a 5.87% CTR in the “10% off” variation. In addition to the lower CTR, the experiment saw 5% fewer impressions and 9% fewer clicks. With these numbers, we could confidently conclude that the original ($10 off) was a better contender than the experiment (10% off).

But we didn’t stop there. We decided to test how “$10 off” would fare against “15% off”. This time, we tried an Ad Variation-specific test to run the “15% off” messaging in two major campaigns. The results were stark – the “15% off” variation had a higher CTR than “$10 off” (5.5% vs. 4.54%). Furthermore, “15% off” saw five times the number of impressions and six times the number of clicks. As a result, we changed our “$10 off” promo messaging to “15% off” across the account.
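To put the two results on the same scale, it can help to express each as a relative change in CTR. The sketch below uses only the CTRs reported above and treats “$10 off” as the original in both tests. The `relative_change` helper is our own illustration, and because the underlying click and impression counts are not given here, it says nothing about statistical significance.

```python
def relative_change(original: float, experiment: float) -> float:
    """Relative change of the experiment metric versus the original."""
    return (experiment - original) / original


# CTRs reported in the case study, in percent.
first_test = relative_change(original=6.13, experiment=5.87)  # "$10 off" vs. "10% off"
second_test = relative_change(original=4.54, experiment=5.5)  # "$10 off" vs. "15% off"
print(f"{first_test:.1%}", f"{second_test:.1%}")  # -4.2% 21.1%
```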

CRITICAL THINKING

At Adlucent, we are constantly re-evaluating our methods. For example, analyzing the previous case study, is CTR the best metric for ad copy testing – or do other metrics provide a stronger performance indicator? One contender for an ad copy metric is Profit Per Impression, which takes into consideration CTR, CVR, AOV, and CPC – all of which can be affected by ad copy.

You may have an ad copy variation that makes users click, but if the products on the website don’t live up to the ad copy’s expectations, a lower CVR may negate the benefits.

Similarly, digital marketers can overemphasize CVR in landing page tests. Even if CVR seems to improve, is traffic down (which can inflate CVR)? And what about AOV, the other half of revenue? A metric that accounts for both CVR and AOV – such as RPC (revenue per click) – may provide better context.
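A short sketch shows how these composite metrics can change the verdict. RPC follows directly from its definition (revenue divided by clicks equals CVR times AOV). The Profit Per Impression formula below is an assumption on our part: it treats profit as margin-adjusted revenue minus ad cost, which is one plausible way to combine CTR, CVR, AOV, and CPC. All numbers are made up.

```python
def revenue_per_click(cvr: float, aov: float) -> float:
    """RPC = revenue / clicks = CVR * AOV."""
    return cvr * aov


def profit_per_impression(ctr: float, cvr: float, aov: float, cpc: float, margin: float = 1.0) -> float:
    """Assumed definition: CTR * (CVR * AOV * margin - CPC).

    margin is the share of revenue kept as profit before ad cost;
    the default of 1.0 means revenue minus ad cost.
    """
    return ctr * (revenue_per_click(cvr, aov) * margin - cpc)


# Made-up numbers: the test ad wins on CTR but loses on CVR.
original = profit_per_impression(ctr=0.050, cvr=0.040, aov=60.0, cpc=1.00)
test = profit_per_impression(ctr=0.060, cvr=0.030, aov=60.0, cpc=1.00)
print(round(original, 3), round(test, 3))  # 0.07 0.048
```

In this example, the test ad copy would look like a winner on CTR alone, yet it earns less per impression once the lower conversion rate is taken into account.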

Conclusion

In short, don’t settle for complacency – one of the most exciting aspects of testing (and of life) is to think critically about how to be accurate yet efficient. Testing is only as good as the methods behind it, so take a step back and consider how you’re testing before jumping in.

So, what are the takeaways? First, A/B testing is a process – you can make a change and test, and then try something else and test it too. This feedback loop helps us live by our motto, “Better Every Day.” Little changes add up and make a big difference.

A/B testing requires time and thought to set up and run, but the exhilaration of seeing a new idea improve performance is worth the effort. Whatever optimization you are looking for today, consider how A/B testing can help you get there.

Are you interested in going even deeper in your performance metrics testing? Take your testing to the next level with Deep Search™, the digital advertising platform that’s powered by automation + backed by human ingenuity. Reach out today to learn more.

Rigel Kim

Rigel is an Account Manager at Adlucent with a penchant for creating a story from data and statistical analysis. She has years of experience across various clients and marketing channels (from ecommerce to in-store, B2C to B2B, Search to Display to Video), combining irreplaceable human insights with increasing Martech and automation.
